The development of the Interoperable Physics Driver (IPD) is funded by the Next Generation Global Prediction System (NGGPS) program as a means to facilitate the research, development, and transition to operations of innovations in atmospheric physical parameterizations. The IPD has been developed continuously by the NOAA Environmental Modeling Center (EMC), the Geophysical Fluid Dynamics Laboratory (GFDL), and the Global Model Test Bed (GMTB). Since version 2.1, the IPD has been included and released in the NOAA Environmental Modeling System (NEMS) trunk, coupled to the Global Spectral Model (GSM) and the GFS physics suite. With IPD version 3.0 (IPD3), the NEMS/GSM results were verified to be identical to the results of the NOAA GFS implementation, which established a benchmark for continued development. GFDL then continued development of the IPD to couple it with the FV3 dynamic core and the GFS physics suite, resulting in the latest version, IPD version 4.0 (IPD4). The main focus of this documentation is the implementation of IPD4 within the NEMS/FV3GFS model. It is important to keep in mind that the development of the IPD is ongoing, and decisions about its future are currently being made.

To facilitate research and implementation, the IPD must be applicable to all possible parameterizations in physics suites. Therefore, IPD development is concurrent with the development of the Common Community Physics Package (CCPP), which is intended to be a collection of parameterizations for use in Numerical Weather Prediction (NWP). Each suite is accompanied by a pre/post parameterization interface, which converts variables between those provided by the driver and those required by the parameterization, in case they differ. Through this mechanism, the CCPP and IPD provide physical tendencies back to the dynamic core, which is in turn responsible for updating the state variables. The IPD and CCPP can also provide variables for diagnostic output, or for use in other Earth System models. Within the CCPP, these parameterizations are necessary to simulate the effects of processes that are either sub-grid in scale (e.g., eddy structures in the planetary boundary layer) or too complicated to be represented explicitly. Common categories of parameterizations include radiation, surface layer, planetary boundary layer and vertical mixing, deep and shallow cumulus, and microphysics. However, other categorizations are possible. For more information about the physics that currently exists in the GSM, which is the prototype for the CCPP and is used with the IPD, please see the CCPP documentation: GFS Operational Physics Documentation

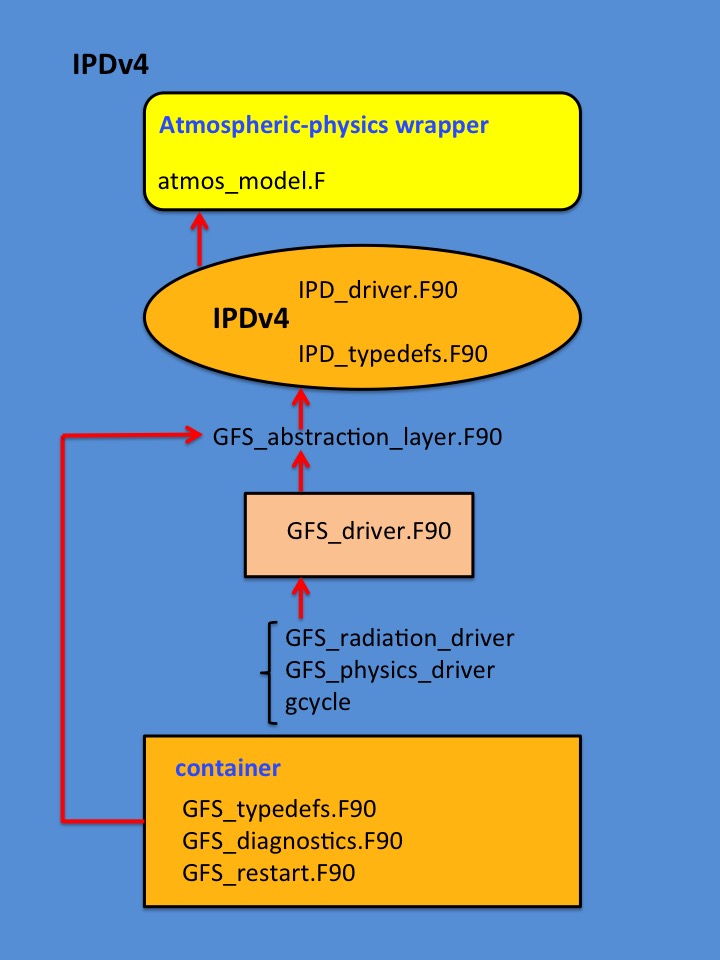

The figure above is an overview diagram of how IPD4 is called in the GFS system.

The IPD will undergo the necessary development to accommodate incoming physics suites, and the CCPP will be designed to accommodate schemes that span multiple categories, such as the Simplified Higher Order Closure (SHOC) parameterization. The parameterizations can be grouped together into "physics suites" (defined here: Definition of a Physics Suite), which are sets of parameterizations known to work well together. Indeed, accurately representing the feedbacks and interactions between the physical processes represented by the parameterizations is essential. The planned work under IPD development is to include the AM4 and Meso Physics suites in NEMSFV3.

The CCPP will be designed to be model-agnostic in the sense that parameterizations contained in the package receive inputs from the dynamic core through the IPD. A pre/post physics layer translates variables between those used in the dynamic core and those required by the Driver, and performs any necessary de- and re-staggering. Currently all physics is assumed to be columnar. The notion of a patch of columns is only intended for the possibility of improving numerical efficiency through vectorization or local shared memory parallelization.
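
As a rough illustration of the patch idea, the sketch below splits a set of columns into blocks of bounded size. The module name demo_blocks, the function make_block_sizes, and its arguments are hypothetical and are not part of the IPD code. It shows one simple way such a decomposition could be done, not necessarily the way NEMS/FV3GFS does it.

```fortran
module demo_blocks
  implicit none
  private
  public :: make_block_sizes

contains

  ! Split ncols columns into blocks of at most max_blksz columns each.
  ! Assumes ncols >= 0 and max_blksz >= 1 (hypothetical helper).
  function make_block_sizes(ncols, max_blksz) result(blksz)
    integer, intent(in)  :: ncols      ! total number of columns
    integer, intent(in)  :: max_blksz  ! largest allowed block size
    integer, allocatable :: blksz(:)   ! number of columns in each block
    integer :: nblks

    ! Ceiling division gives the number of blocks needed.
    nblks = (ncols + max_blksz - 1) / max_blksz
    allocate(blksz(nblks))

    if (nblks > 0) then
      ! All blocks are full except possibly the last one,
      ! which takes whatever columns remain.
      blksz = max_blksz
      blksz(nblks) = ncols - (nblks - 1) * max_blksz
    end if
  end function make_block_sizes

end module demo_blocks
```

For 21 columns and a maximum block size of 8, make_block_sizes(21, 8) yields three blocks of 8, 8, and 5 columns. Because the physics is columnar, each block can be processed independently, which is what makes vectorization within a block or threading over blocks possible.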

There is also a plan to develop a standalone IPD. The standalone IPD will be used to invoke any set of parameterizations or suite within the CCPP offline, in contrast to running the coupled FV3 model online within the NEMS framework. The standalone driver will be driven by snapshots of data from the online model (NEMSFV3). It will provide an alternative way to test the physics suites. The process will be simple, but the results will be close to those obtained with the online model.

The IPD is expected to interact with any set of physics and any dynamic core, so several requirements must be met to support this interaction. Because of its purpose as a Community tool to promote research with operational models and foster transition of research to operations, it is imperative that requirements also be placed on the physics parameterizations.

These requirements are stated explicitly here:

Interoperable Physics Driver and Common Community Physics Package (CCPP): Goals and Requirements

It is important that the IPD is able to support a physics suite as an identifiably distinguishable entity from an arbitrary group of physical parameterizations. The distinction between physical parameterization and physics suite is made as follows.

A physical parameterization is a code that represents one or more physical processes that force or close model dynamics. It is defined by the code implementation of the mathematical functions comprising the scheme, and not by a particular set of parameters or coefficients that could be set externally.

A physics suite is a set of non-redundant atmospheric physical parameterizations that have been designed or modified to work together to meet the forcing and closure requirements of a dynamical core used for a weather or climate prediction application. A set of physical parameterizations chosen to be identified as a suite results from the needs and judgements of a particular user, developer, or group of either.

In some cases, a suite may be identified as a benchmark or reference set of physical parameterizations, against which variations can be tested. Since a suite can be configured in different ways for different applications by modifying its tunable parameters, an accompanying set of tunable parameters should be specified when defining a reference implementation or configuration of a physics suite.

In the context of NGGPS, a Physics Review Committee will be established to determine which physical parameterizations should be accepted into the Common Community Physics Package, and which physics suites should be identified as such.

An ensemble physics suite is a collection of physics suites as defined above, and may be implemented as part of a multi-physics ensemble forecast system.

Currently, physics suites are only allowed to support columnar physics.

The IPD-related code uses modern Fortran standards up to F2003 and should be compatible with current Fortran compiler implementations. Model data are encapsulated in several Derived Data Types (DDTs) with type-bound procedures. Most of the model arguments are pointers to the actual arrays, which are allocated and, by default, managed externally to the driver. The DDTs serve as containers for the passed arguments, and several DDTs exist to give the data some structure and organization. One goal and constraint of this development was to minimize changes to existing code. The GFS currently calls multiple physics schemes as part of its physics suite. In doing so, it passes many atmospheric variables between the dynamic core and the physics modules using an initialization procedure. This list of arguments had become unruly, consisting of over a hundred variables. Through the use of the DDTs, the list was reduced to a more succinct set of required variables (on the order of 10) to be used by all of the physics modules in their interaction with the atmospheric model.

    type IPD_data_type
      public
      type(statein_type)  :: Statein
      type(stateout_type) :: Stateout
      type(sfcprop_type)  :: Sfcprop
      type(coupling_type) :: Coupling
      type(grid_type)     :: Grid
      type(tbd_type)      :: Tbd
      type(cldprop_type)  :: Cldprop
      type(radtend_type)  :: Radtend
      type(diag_type)     :: Intdiag
    end type IPD_data_type

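A generic DDT with type-bound procedures takes the following form:

    ! Container for input-only data
    type model_data_in
      private
      real :: vara
      real :: varb
    end type

    ! Top-level container grouping the input, output, and in/out data.
    ! The definitions of model_data_out and model_data_inout are not shown here.
    type model_data
      private
      type (model_data_in)    :: data_in
      type (model_data_out)   :: data_out
      type (model_data_inout) :: data_inout
    contains
      ! Type-bound procedures used to fill each group of data
      procedure :: setin    => set_model_in
      procedure :: setout   => set_model_out
      procedure :: setinout => set_model_inout
    end type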
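
The statement that most model arguments are pointers to arrays managed outside the driver can be illustrated with a small sketch. The names demo_pointer_container, demo_state_type, attach, and is_attached are hypothetical and chosen only for illustration. The sketch assumes a single one-dimensional field, whereas the real containers hold many fields.

```fortran
module demo_pointer_container
  implicit none
  private
  public :: demo_state_type

  ! Hypothetical container: it holds a pointer, not its own copy of the data.
  type :: demo_state_type
    real, pointer :: temperature(:) => null()
  contains
    procedure :: attach      => state_attach
    procedure :: is_attached => state_is_attached
  end type demo_state_type

contains

  ! Point the container at an array that is allocated and owned elsewhere.
  ! The actual argument must have the TARGET (or POINTER) attribute so that
  ! the association remains valid after this routine returns.
  subroutine state_attach(self, field)
    class(demo_state_type), intent(inout) :: self
    real, target,           intent(inout) :: field(:)
    self%temperature => field
  end subroutine state_attach

  ! Report whether the container currently refers to any data.
  logical function state_is_attached(self)
    class(demo_state_type), intent(in) :: self
    state_is_attached = associated(self%temperature)
  end function state_is_attached

end module demo_pointer_container
```

Because the container only refers to the model's array, a value written through the container's temperature component is immediately visible in the model's own array, and no copy is made when the container is passed to a physics routine.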

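The current implementation of the driver uses the following set of DDTs, which are the components of IPD_data_type: Statein, Stateout, Sfcprop, Coupling, Grid, Tbd, Cldprop, Radtend, and Intdiag.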

The methods belonging to each of these DDTs vary, but consist of some combination of these four:

When the DDTs are created, the variables are initially assigned null values. Then, as the create methods are called on the containers, the parameters (including the values of the array sizes) are defined. These array-size values are then passed into the physics routines, where the arrays are allocated.

As an example, the following loop allocates the variables for the model run, one block at a time:

    do nb = 1,size(Init_parm%blksz)
      call Statein (nb)%create (Init_parm%blksz(nb), IPD_Control)
      call Stateout(nb)%create (Init_parm%blksz(nb), IPD_Control)
      call Sfcprop (nb)%create (Init_parm%blksz(nb), IPD_Control)
      call Coupling(nb)%create (Init_parm%blksz(nb), IPD_Control)
      call Grid    (nb)%create (Init_parm%blksz(nb), IPD_Control)
      call Tbd     (nb)%create (Init_parm%blksz(nb), IPD_Control)
      call Cldprop (nb)%create (Init_parm%blksz(nb), IPD_Control)
      call Radtend (nb)%create (Init_parm%blksz(nb), IPD_Control)
      !--- internal representation of diagnostics
      call Diag    (nb)%create (Init_parm%blksz(nb), IPD_Control)
    enddo

All other physics arrays are allocated in a similar manner.
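
To make the create pattern concrete, here is a minimal sketch of a container whose components start out null and are allocated by a type-bound create procedure. The names demo_containers, demo_control_type, demo_statein_type, temp, and psfc are hypothetical stand-ins, and the real containers hold many more fields. The argument order (block size first, then the control container) simply mirrors the calls shown above.

```fortran
module demo_containers
  implicit none
  private
  public :: demo_control_type, demo_statein_type

  ! Hypothetical stand-in for the control container (the role of IPD_Control).
  type :: demo_control_type
    integer :: levs = 0                    ! number of vertical levels
  end type demo_control_type

  ! Hypothetical stand-in for a state container such as Statein.
  type :: demo_statein_type
    real, pointer :: temp(:,:) => null()   ! temperature (columns, levels)
    real, pointer :: psfc(:)   => null()   ! surface pressure (columns)
  contains
    procedure :: create => statein_create
  end type demo_statein_type

contains

  ! Allocate the arrays for one block, using the block size and
  ! the dimensions stored in the control container.
  subroutine statein_create(self, im, model)
    class(demo_statein_type), intent(inout) :: self
    integer,                  intent(in)    :: im     ! columns in this block
    type(demo_control_type),  intent(in)    :: model

    allocate(self%temp(im, model%levs))
    allocate(self%psfc(im))
    self%temp = 0.0
    self%psfc = 0.0
  end subroutine statein_create

end module demo_containers
```

A caller would declare an array of demo_statein_type with one element per block and invoke create on each element with that block's size, in the same way as the loop above. Before create is called, the pointer components are disassociated. Afterwards, each block holds arrays sized for its own number of columns.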

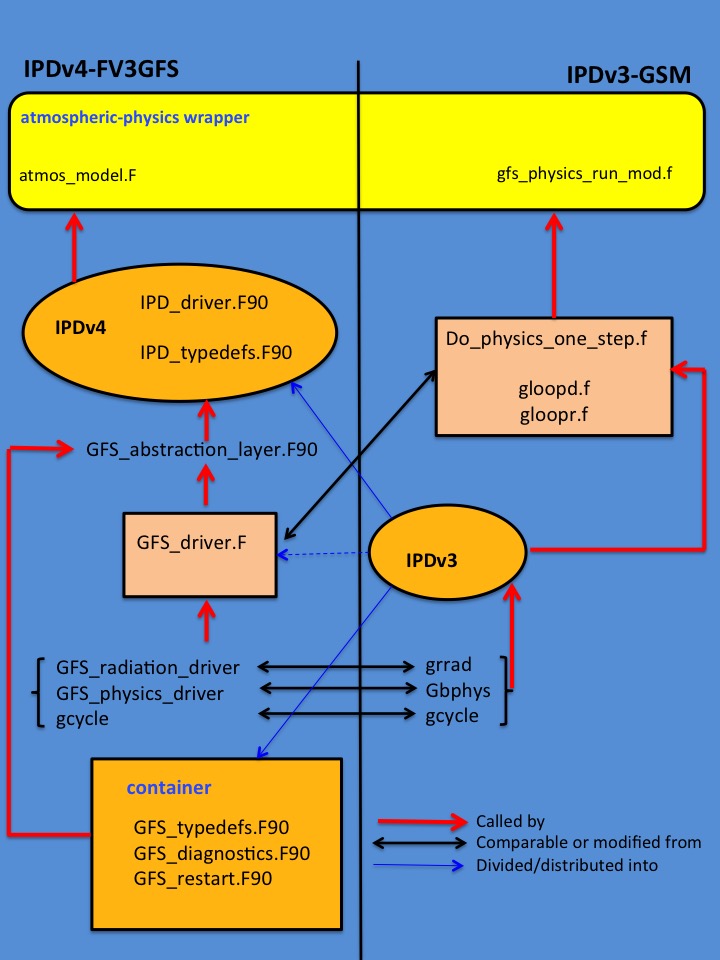

A comparison between the call trees of IPD version 4 and IPD version 3 for GFS physics can be found in the documentation. IPD v4 is based on the IPD v3 structure and code, but makes important updates to the calling sequence. The original IPD v3 code is distributed among IPD v4, GFS_driver, and basic code for defining containers. The code for calling physics suites was moved into IPD v4 and improved. The code for allocation and for the calling sequence of the physics processes was moved into GFS_driver. The IPD v3 code for defining DDTs was separated out as container definition code. With this change, IPD v4 functions as a calling interface, which is easier to keep unchanged when connecting different physics suites.

In the current implementation of the IPD, the variables from the dynamic core (names, units, etc.) can exactly match the variables needed by the GFS physics or other physics suites. Therefore, to connect the variables between the dynamical core and the physics, it is only necessary that the subroutine arguments in the calls that create the containers correctly coincide with the local input variables. For other dynamic cores and physics packages, the situation will likely be similar. Specific wrapper code such as GFS_driver creates a translation layer between the dynamic core and the IPD, between the physics and the IPD, or both. The dynamic core may use variables with different units, completely different variable types (e.g., relative vs. specific humidity), different staggering, etc., which will therefore need to be translated into a form that can be used by the physics. Once the physics step is complete, the physics variables will need to be translated back into a form that can be used by the dynamic core. In the IPD v4 design, the translation layer(s) are external to the Driver so that it may remain agnostic to the specific choice of dynamic core and physics suite. A separate implementation of the translation layer would be necessary for each unique pairing of a dynamical core and a physics suite.
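
The kind of conversion a translation layer performs can be sketched with two simple cases. The module and function names below are hypothetical and do not come from GFS_driver. The sketch converts between water vapor mixing ratio and specific humidity, rather than the relative-humidity case mentioned above, because that conversion has a closed form that needs no temperature or pressure. It also converts pressure between hPa and Pa as an example of a pure unit change.

```fortran
module demo_translation
  implicit none
  private
  public :: mixing_ratio_to_specific_humidity
  public :: specific_humidity_to_mixing_ratio
  public :: hpa_to_pa, pa_to_hpa

contains

  ! Water vapor mixing ratio w (kg vapor per kg dry air) to
  ! specific humidity q (kg vapor per kg moist air): q = w / (1 + w).
  elemental function mixing_ratio_to_specific_humidity(w) result(q)
    real, intent(in) :: w
    real :: q
    q = w / (1.0 + w)
  end function mixing_ratio_to_specific_humidity

  ! Inverse conversion: w = q / (1 - q), valid for q < 1.
  elemental function specific_humidity_to_mixing_ratio(q) result(w)
    real, intent(in) :: q
    real :: w
    w = q / (1.0 - q)
  end function specific_humidity_to_mixing_ratio

  ! Pressure in hPa to pressure in Pa.
  elemental function hpa_to_pa(p_hpa) result(p_pa)
    real, intent(in) :: p_hpa
    real :: p_pa
    p_pa = 100.0 * p_hpa
  end function hpa_to_pa

  ! Pressure in Pa to pressure in hPa.
  elemental function pa_to_hpa(p_pa) result(p_hpa)
    real, intent(in) :: p_pa
    real :: p_hpa
    p_hpa = p_pa / 100.0
  end function pa_to_hpa

end module demo_translation
```

Because the functions are elemental, they can be applied to whole arrays of columns and levels at once. In a design like the one described above, conversions of this kind would be applied to the dynamic core fields before the physics call, and the inverse conversions would be applied to the results afterwards, with a separate set of conversions for each pairing of dynamic core and physics suite.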